Your workbench for AI engineering

Version, test, and monitor every prompt and agent with robust evals, tracing, and regression sets. Empower domain experts to collaborate in the visual editor.

Trusted by companies like yours

Prompt management

Visually edit, A/B test, and deploy prompts. Compare usage and latency. Avoid waiting for engineering redeploys.

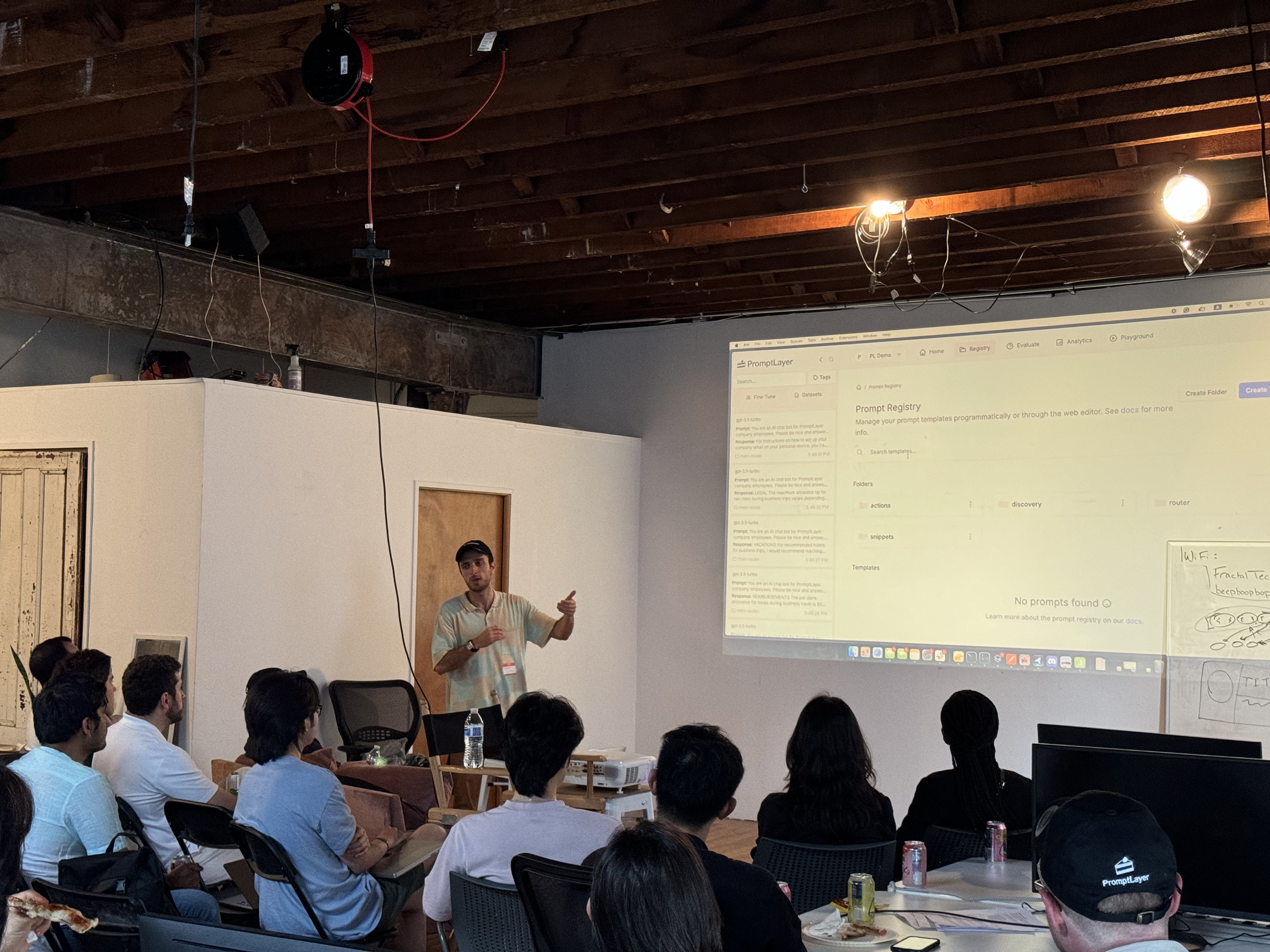

Collaboration with experts

Open up prompt iteration to non-technical stakeholders. Our LLM observability tools let you read logs, find edge cases, and improve prompts.

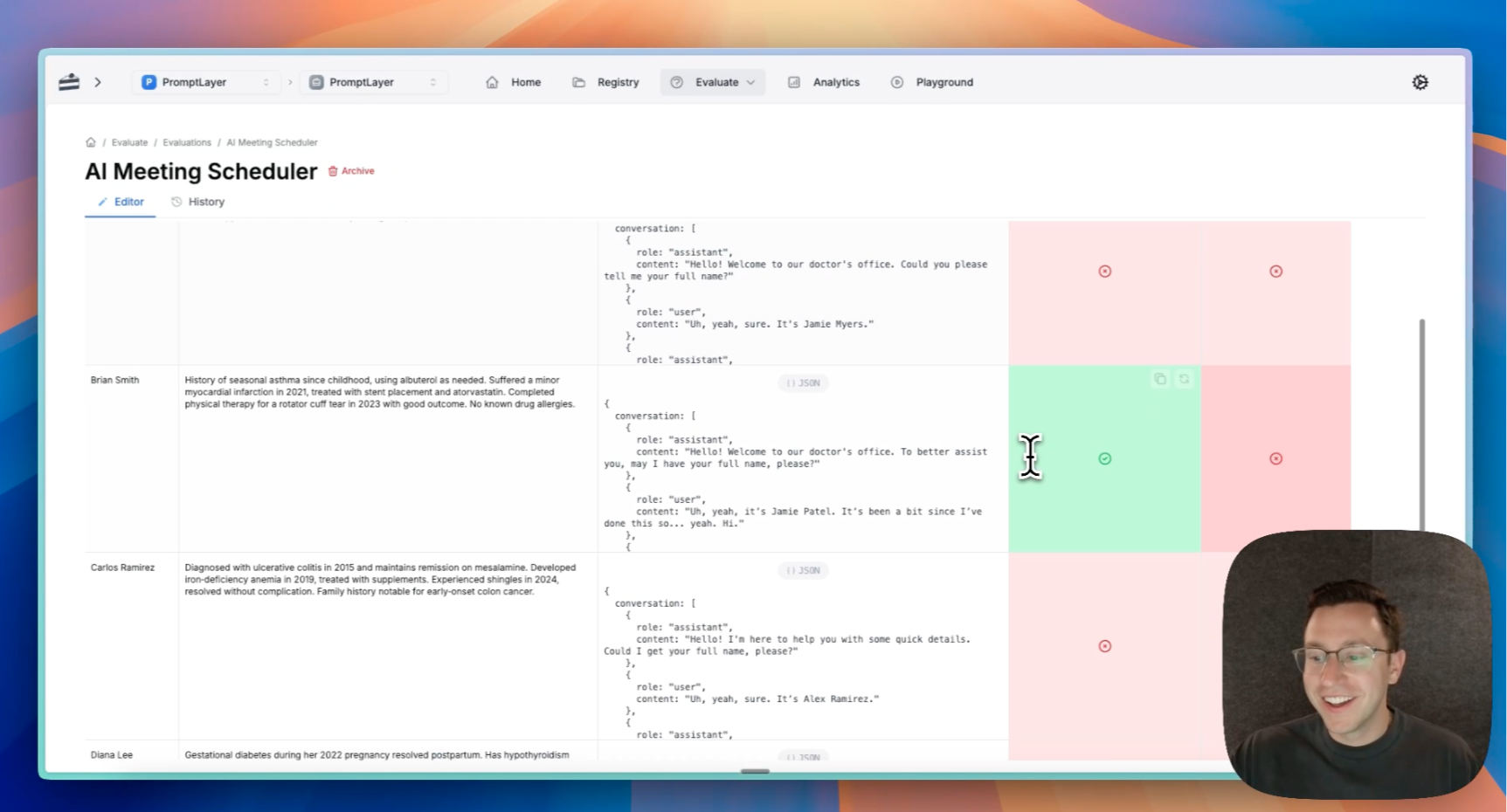

Evaluation

Evaluate prompts against usage history. Compare models. Schedule regression tests. Build one-off batch runs.

Case Studies

Prompt with experts

Prompt with actions

Building good AI is about understanding your users. That's why subject matter experts are the best prompt engineers.

No-code prompt editor

Update and test prompts directly from the dashboard.

Include non-technical stakeholders

Enable product, marketing, and content teams to edit prompts directly.

Avoid engineer bottlenecks

Decouple engineering releases from prompt deploys.

Version prompts

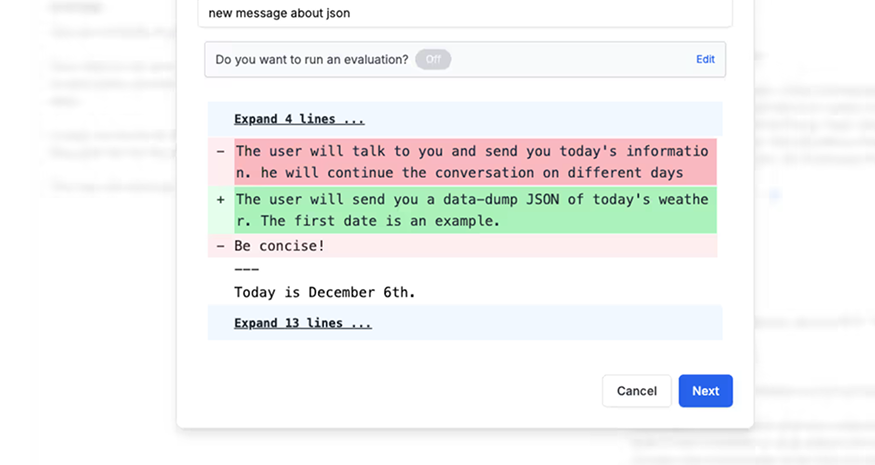

Edit and deploy prompt versions visually using our dashboard. No coding required.

Organize versions

Comment, write notes, diff versions, and roll back changes.

Deploy new prompts

Publish new prompts interactively for prod and dev.

Clean up your repo

Prompts shouldn't be scattered through your codebase.

A/B test prompts

Release new prompt versions gradually and compare metrics.
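A gradual release sends a fixed share of traffic to the new version and grows that share as the metrics hold up. The snippet below is a minimal sketch of one common way to do the split: hash each user ID into a stable bucket from 0 to 99. It is a generic technique, not PromptLayer's API; `assign_version` and its parameters are hypothetical.

```python
import hashlib


def assign_version(user_id: str, rollout_percent: int,
                   new_version: str, old_version: str) -> str:
    """Return the prompt version a user should see during a gradual rollout.

    Hypothetical helper: hashes the user ID into a stable bucket in [0, 100)
    and sends users whose bucket is below rollout_percent to the new version.
    """
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # the same user always lands in the same bucket
    return new_version if bucket < rollout_percent else old_version


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for pct in (10, 50, 100):
        # Fraction of simulated users routed to the new version at this rollout level
        share = sum(assign_version(u, pct, "v2", "v1") == "v2" for u in users) / len(users)
        print(f"{pct}% rollout -> {share:.1%} of users on v2")
```

Because each user's bucket never changes, raising the percentage only adds users to the new version. Nobody flips back and forth between versions, which keeps the per-version metrics comparable.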

Evaluate iteratively

Rigorously test prompts before deploying, with the help of human and AI graders.

Historical backtests

See how new prompt versions fare against historical data.

Regression tests

Trigger evals to run every time a prompt is updated.

Compare models

Test prompts against different models and parameters.

One-off bulk jobs

Run prompt pipelines against a batch of test inputs.

Monitor usage

Understand how your LLM application is being used, by whom, and how often. No need to jump back and forth to Mixpanel or Datadog.

Cost and latency stats

View high-level stats about your LLM usage.

Latency trends

Understand latency trends over time, by feature, and by model.

Jump to bug report

Quickly find execution logs for a given user.

Use cases

Personalized language tutor apps

Busuu uses LLMs to give every user on its app personalized language-learning feedback on their speaking and conversational skills. The team iterates on feedback prompts stored in PromptLayer to tailor the right voice, runs batch evaluations to examine how useful the feedback is, and compares different models against each other.

"We use PromptLayer to evaluate changes to our instructions and compare the output across prompt versions and models to make sure our learners receive accurate and useful feedback to help them on their journey."

— Hannah Morris (Head of Learning Design @ Busuu)

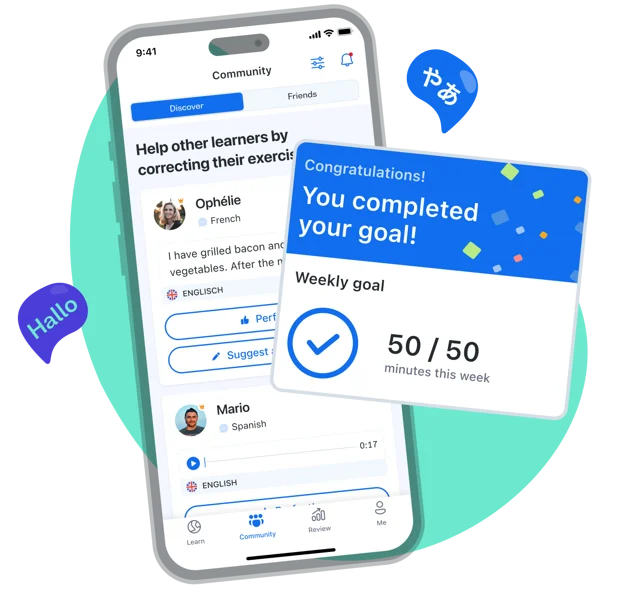

Automated AI sales outbound

We use PromptLayer internally to build PromptLayer. Every time someone new signs up, it kicks off a PromptLayer agent that qualifies the lead, researches the company, and writes a highly personalized outreach email. We spent hours in the dashboard versioning, tweaking, and test-running the email-writing prompt until it was just right.

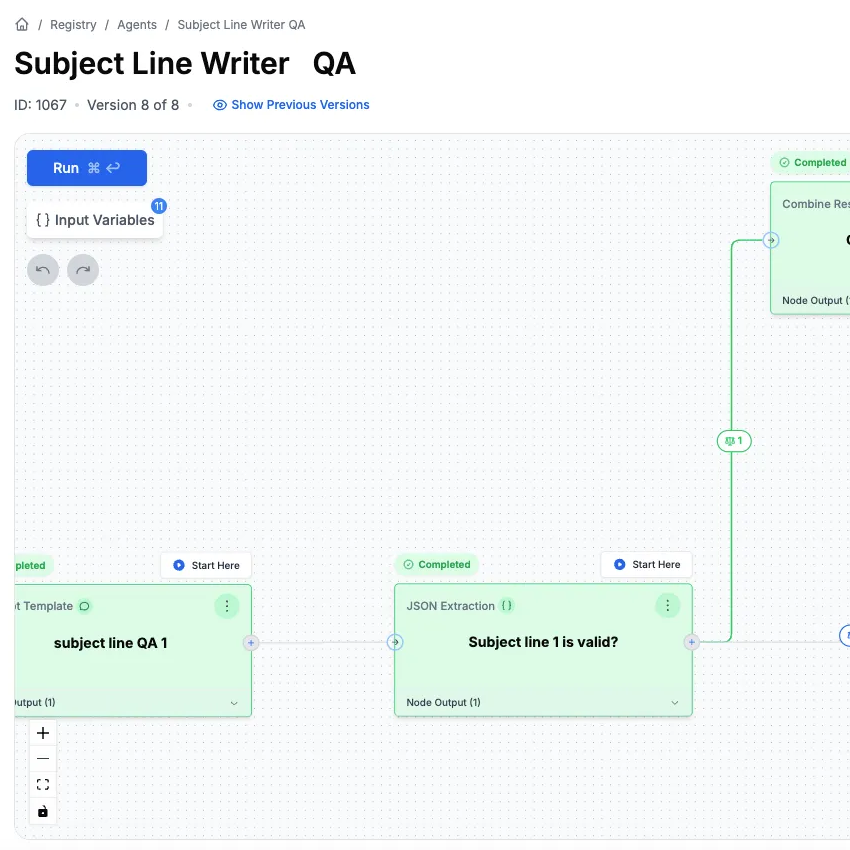

E-commerce customer support

Gorgias has built an AI-powered customer helpdesk for Shopify stores. Its team of machine learning engineers and support specialists uses PromptLayer to make sure every user interaction is resolved successfully: refining prompts, replaying edge cases, running regression evals, and surveying live traffic.

All of their prompts, agents, and tool calls are stored and iterated on within PromptLayer.

What users are saying

Collaborate without engineering

Move your prompts out of code and serve them from our CMS. Enable subject matter experts, like PMs or content writers, to edit and test prompt versions all through the PromptLayer dashboard.

Our thoughts on prompt engineering and context design

Prompt Management And Collaboration Using A CMS

Our Favorite Prompts From The Tournament

Prompting Tips For Anthropic Claude

Building Better AI Systems

Model agnostic

One prompt template for every model.

Prompt engineering pioneers

We are building a community for the real builders of AI: the prompt engineers. They come in all shapes and sizes: lawyers, doctors, educators, and even software engineers.

Get Started

The First Platform Built For Prompt Engineering